If you’re keen to learn more about AI, check out our screencast Microsoft Cognitive Services and the Text Analytics API to add sentiment analysis to your bot.

Api.ai is a really simple service that allows developers to create their own basic personal AI assistant/chatbot that works a bit like Siri and Amazon’s Alexa. I recently covered how to build your own AI assistant using Api.ai, where I showed the basics of setting up an AI assistant and teaching it some basic small talk. In this article, I’d like to go a step further and introduce “intents” and “contexts”, a way of teaching our AI assistants more specific actions that are personalized to our own needs. This is where things can get really exciting.

Note: this article was updated in 2017 to reflect recent changes to Api.ai.

Building an AI assistant with Api.ai

This post is one of a series of articles aimed at helping you get a simple personal assistant running with Api.ai:

- How to Build Your Own AI Assistant Using Api.ai

- Customizing Your Api.ai Assistant with Intent and Context (this one!)

- Empowering Your Api.ai Assistant with Entities

- How to Connect Your Api.ai Assistant to the IoT

What is an Intent?

An intent is a concept that your assistant can be taught to understand and react to with a specific action. An intent contains a range of example sentences that the user might say to our assistant to express it. A few examples of intents could include “Order me lunch”, “Show me today’s Garfield comic strip”, “Send a random GIF to the SitePoint team on Slack”, “Cheer me up” and so on. Each of those would be a custom intent that we could train our assistant to understand.
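Here is a minimal sketch of what a single intent bundles together, written as a plain Python dataclass. The `Intent` class and its field names are hypothetical. They mirror the console fields used in this article (“User says”, the action name and the speech response), not Api.ai’s own internal format.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Intent:
    """Hypothetical model of an intent: example phrases plus what to do when one matches."""

    name: str
    user_says: list[str]  # example sentences that should trigger this intent
    action: str = ""  # action name handed back to your web app
    speech: list[str] = field(default_factory=list)  # replies the agent can choose from


cheer_me_up = Intent(
    name="Cheer me up",
    user_says=["Cheer me up", "Make me smile", "I feel sad"],
    action="cheermeup",
    speech=["Let's cheer you up! Would you like a joke or a movie quote?"],
)

print(cheer_me_up.action)  # cheermeup
```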

Creating an Intent

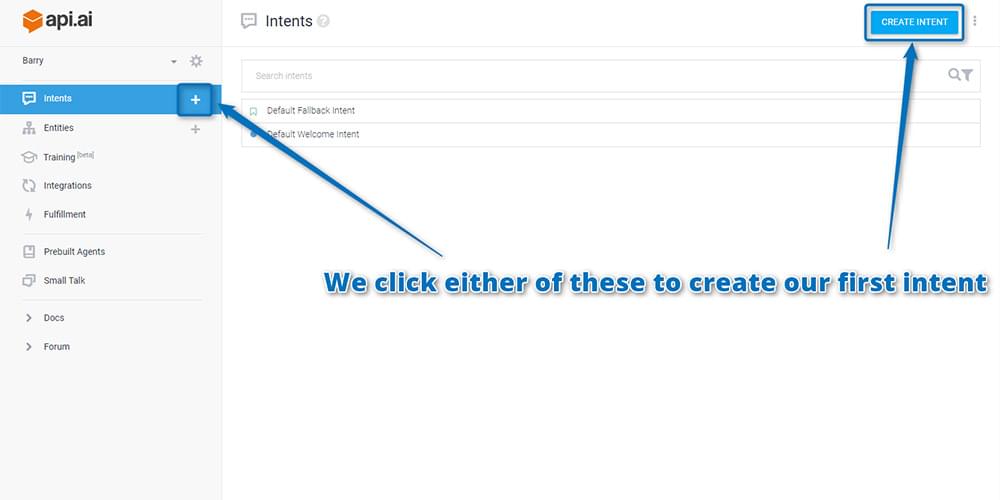

To create an intent, open the agent you’d like to add the new functionality to in the Api.ai console. Then click either the “Create Intent” button next to the “Intents” heading at the top of the page or the plus icon next to “Intents” in the left-hand menu:

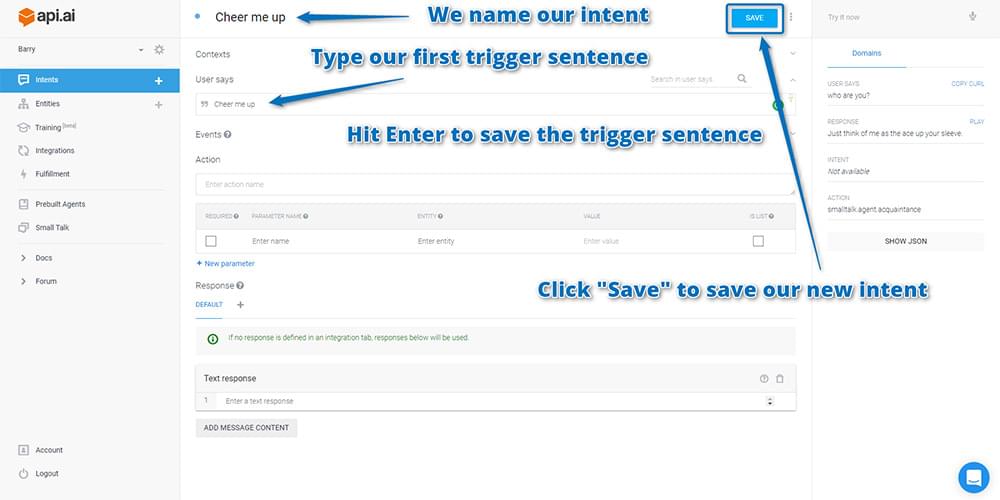

The sample intent for this demo teaches our assistant to cheer people up when they’re feeling down, using movie quotes, jokes and other things. To start, call the new intent “Cheer me up” and write your first trigger sentence underneath “User says”. The first sentence I’ve added below is “Cheer me up”. Hit the Enter key or click “Add” to add your sentence:

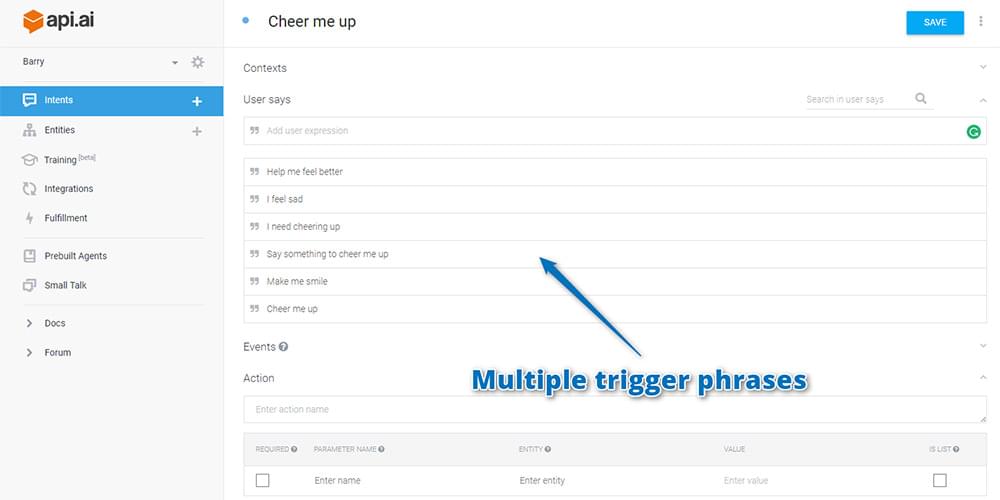

Typically, there’s a range of different ways we might say the same thing. To account for these, add in a range of statements that represent various ways a user might indicate they’d like cheering up, such as “Make me smile” and “I feel sad”:

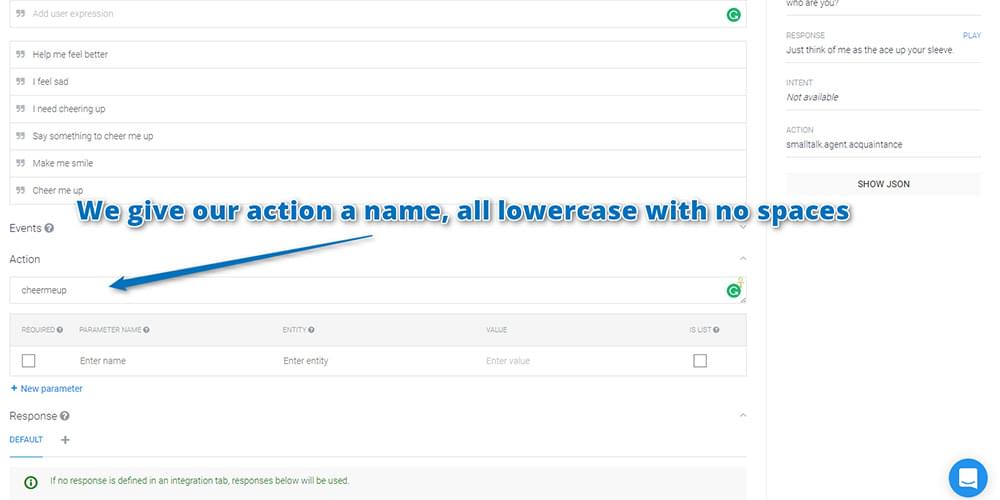
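Api.ai matches what the user types against these example sentences using its own machine learning model, and the details of that model aren’t published. To get a rough intuition for why extra examples help, here is a toy stand-in. It scores an input by word overlap (Jaccard similarity) with each example and picks the best intent above a threshold. The function names and the 0.3 threshold are assumptions for illustration only, and this is not how Api.ai actually works.

```python
from __future__ import annotations

import re


def tokens(text: str) -> set[str]:
    """Lower-case the text and split it into a set of words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def similarity(a: str, b: str) -> float:
    """Jaccard similarity: shared words divided by all distinct words."""
    ta, tb = tokens(a), tokens(b)
    if not ta or not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


def match_intent(text: str, intents: dict[str, list[str]], threshold: float = 0.3) -> str | None:
    """Return the intent whose closest example scores at least `threshold`, else None."""
    best_name, best_score = None, 0.0
    for name, examples in intents.items():
        score = max(similarity(text, example) for example in examples)
        if score > best_score:
            best_name, best_score = name, score
    return best_name if best_score >= threshold else None


intents = {"Cheer me up": ["Cheer me up", "Make me smile", "I feel sad"]}
print(match_intent("Make me smile please", intents))  # Cheer me up
print(match_intent("What's the weather?", intents))  # None
```

Inputs that share few words with every example fall below the threshold and match nothing. That is why it pays to add phrasings that differ a lot from your existing ones.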

Now there’s a range of sentences the assistant should understand, but you haven’t told it what action is expected when it hears them. To do so, create an “action”. The assistant will return action names to your web app so that the app can respond.

In this case, your web app won’t respond to this first action, which I’ve called “cheermeup”, but action names will come in handy in future when responding to actions in your web app. I’d recommend always including action names for your intents.

You can add parameters to your actions too, but I’ll cover those in detail in our next article on Api.ai!

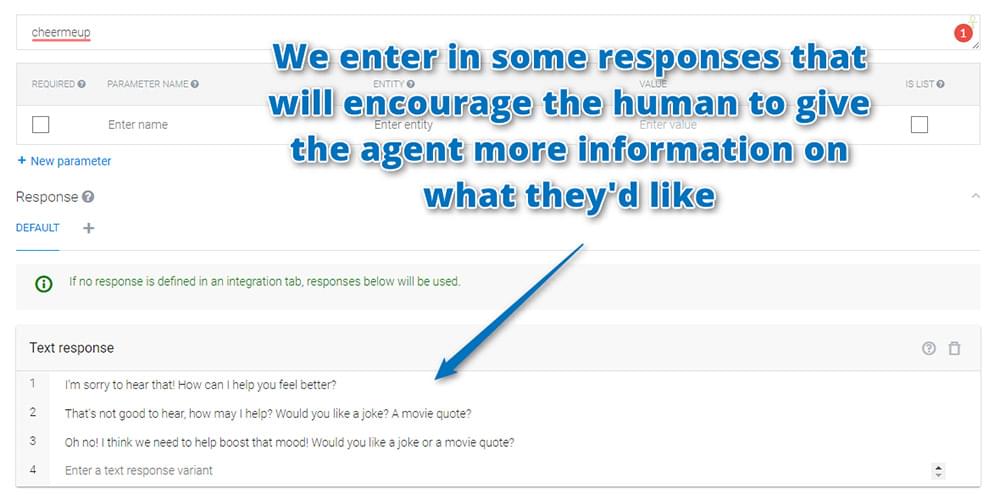
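When the agent matches an intent, the web app receives the action name alongside the speech response. Below is a minimal sketch of pulling both out of the response. It assumes the JSON shape of Api.ai’s v1 `/query` endpoint (`result.action` and `result.fulfillment.speech`), so compare it with the JSON your own agent returns before relying on it. `read_result` is a hypothetical helper name.

```python
import json

# A trimmed-down response in the assumed shape of Api.ai's v1 /query endpoint.
raw = """
{
  "result": {
    "resolvedQuery": "Cheer me up",
    "action": "cheermeup",
    "metadata": {"intentName": "Cheer me up"},
    "fulfillment": {"speech": "Let's cheer you up! Would you like a joke or a movie quote?"}
  },
  "status": {"code": 200}
}
"""


def read_result(response: dict) -> tuple[str, str]:
    """Return (action name for your own logic, speech to show the user)."""
    result = response.get("result", {})
    action = result.get("action", "")
    speech = result.get("fulfillment", {}).get("speech", "")
    return action, speech


action, speech = read_result(json.loads(raw))
print(action)  # cheermeup
print(speech)  # Let's cheer you up! Would you like a joke or a movie quote?
```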

Guiding Via Speech Response

After your user has told the agent they’d like to be cheered up, you want to guide them towards telling the agent more about what they’d like. This helps provide the illusion of intelligence while limiting how much the chatbot needs to handle. To do so, provide speech responses in the form of questions within the “Speech Response” section. For example, “Let’s cheer you up! Would you like a joke or a movie quote?”

Finally, click the “Save” button next to your intent name to save your progress.

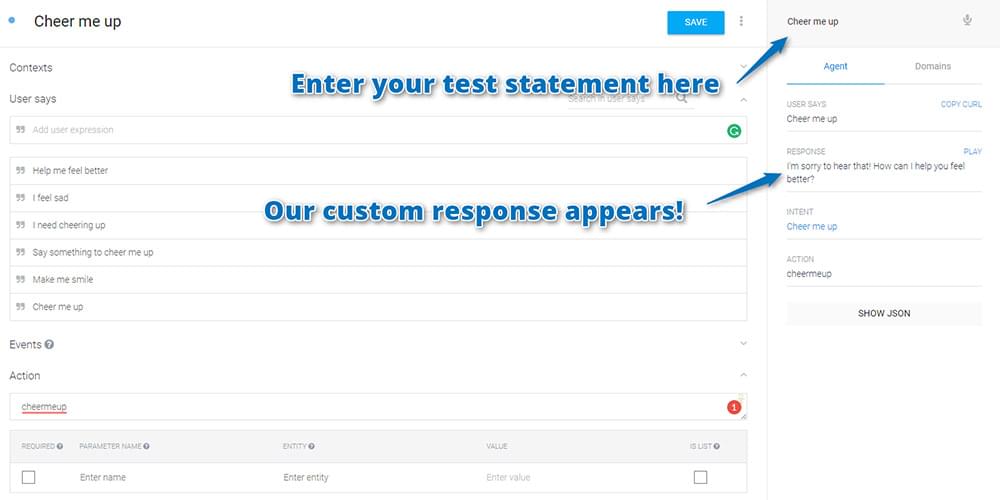

Testing Your Agent

You can test your new intent by typing a statement into the test console on the right. Try it out by saying “Cheer me up”:

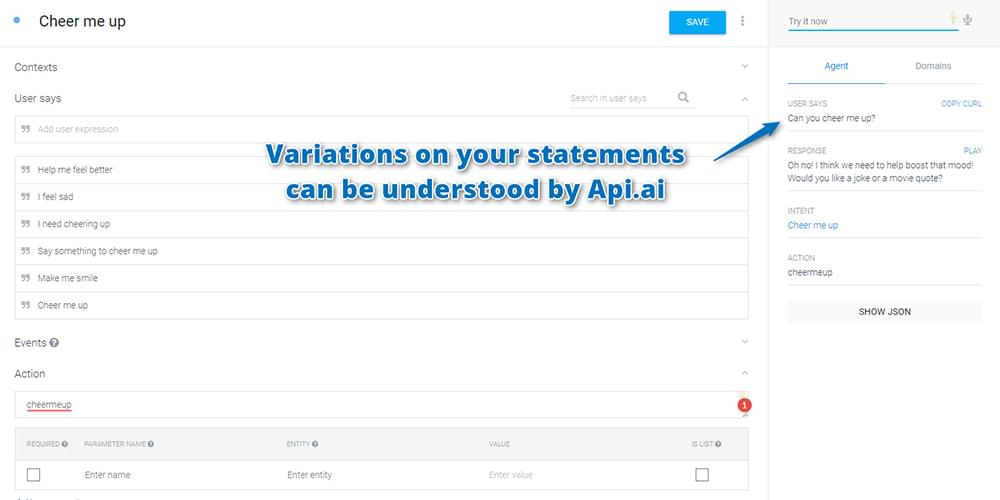

The agent responds with one of your trained responses, as intended. After you’ve provided the phrase once, Api.ai learns! It will then recognize variations on the phrasing of the statement. For example, “Make me smile please”, “Say something to make me smile” or “I feel sad right now” will trigger your intent too.

These variations seemed to trigger only after I’d used the original phrase with the bot first. I’m not sure whether it’s just a delay in how long it takes to generate understanding of similar phrases, but if a variation doesn’t work, try asking the original statement first. If your variation is too different, you’ll need to add it to the intent’s “User says” statements. The more statements you add there, the better your agent will be able to respond.

One thing you might notice if you used a speech response like “I’m sorry to hear that! How can I help you feel better?” is that it isn’t specific enough to guide the user. If they don’t know the options are “movie quote” or “joke”, they might ask for something you haven’t covered! Over time, you can train your agent to understand many other concepts. For now, though, I’d recommend being specific with your questions!

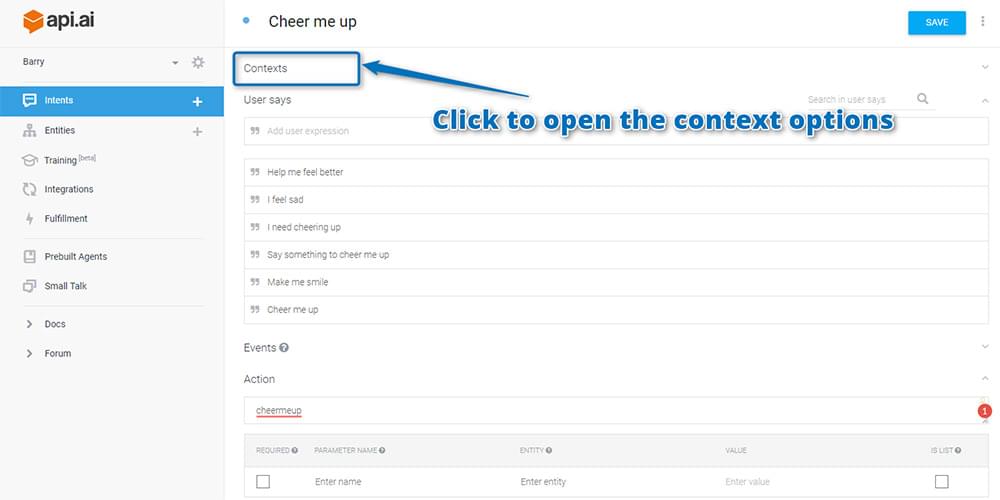

Using Contexts

Because you’re guiding the conversation with your speech response, your agent needs a way to remember what the conversation was about when the user next speaks to it. If a user says the words “A joke” or even “Either one” without any prior conversation, that sentence might not be clear enough for the agent to respond to. How would you respond if I walked up to you and just said “A joke”? At the moment, that’s all your assistant would be given, as it has no way of remembering where the conversation was heading.

This is where setting contexts in Api.ai comes in. We create contexts to track what the user and agent have been speaking about. Without contexts, each sentence would be completely isolated from the one before it.

To create a context, click the “Define contexts” link at the top of the Api.ai console for your intent:

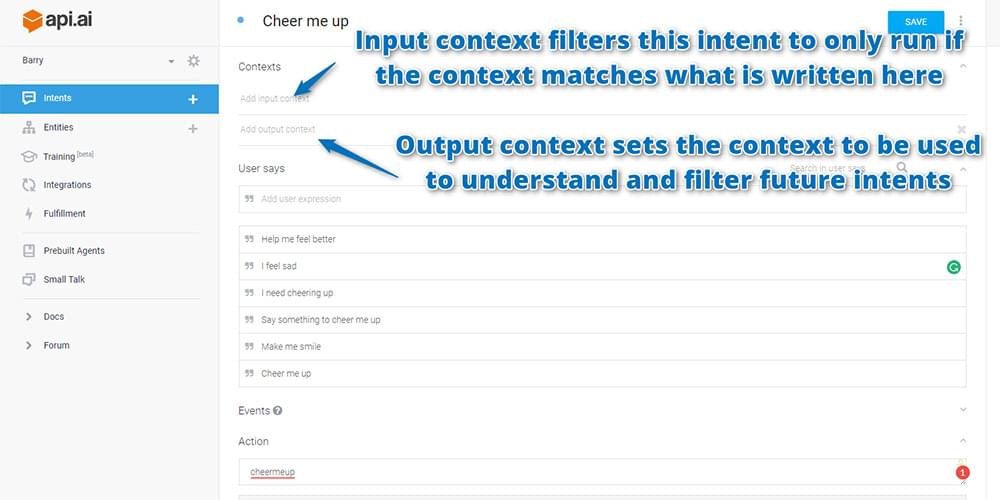

Here you’ll have a section for input contexts and a section for output contexts. Input contexts tell the agent in which context the intent should be run. You want your first intent to run at any time, so leave its input contexts blank. Output contexts are set when the intent runs, so that other intents can pick up the conversation in future messages. This is the one you want:

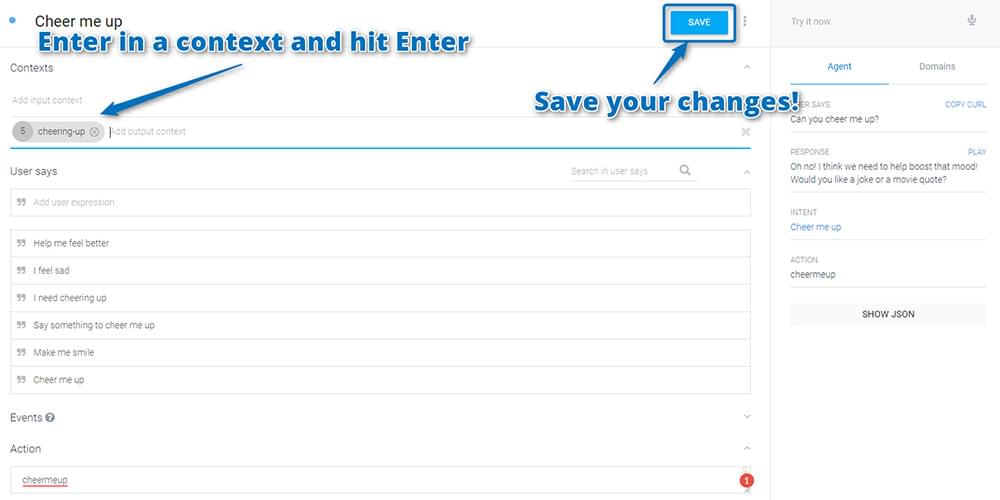

Now create an output context called “cheering-up”. When naming a context, Api.ai suggests alphanumeric names without spaces. Type in your context and hit the Enter key to add it. Then click “Save” to save your changes:

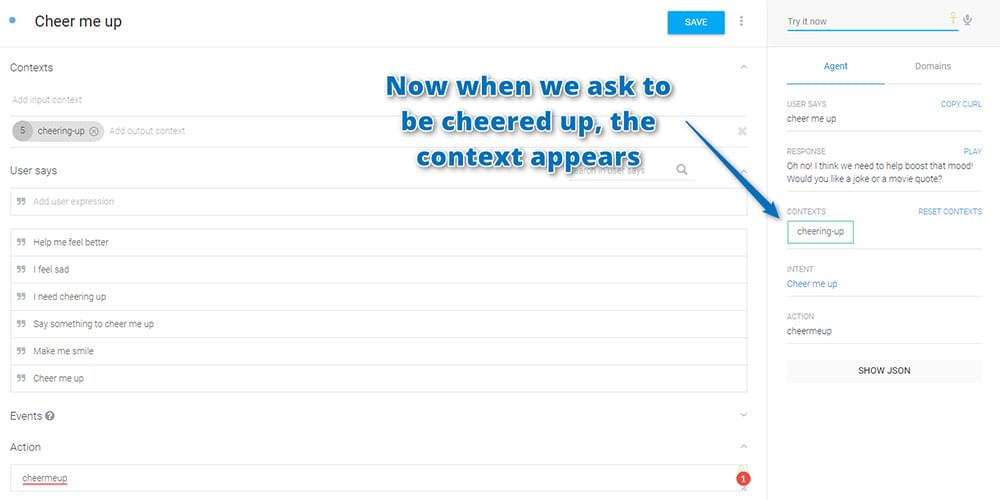

If you then test out your agent by asking them to “Cheer me up” once more, the result shows your context is now appearing too:

Filtering Intents With Contexts

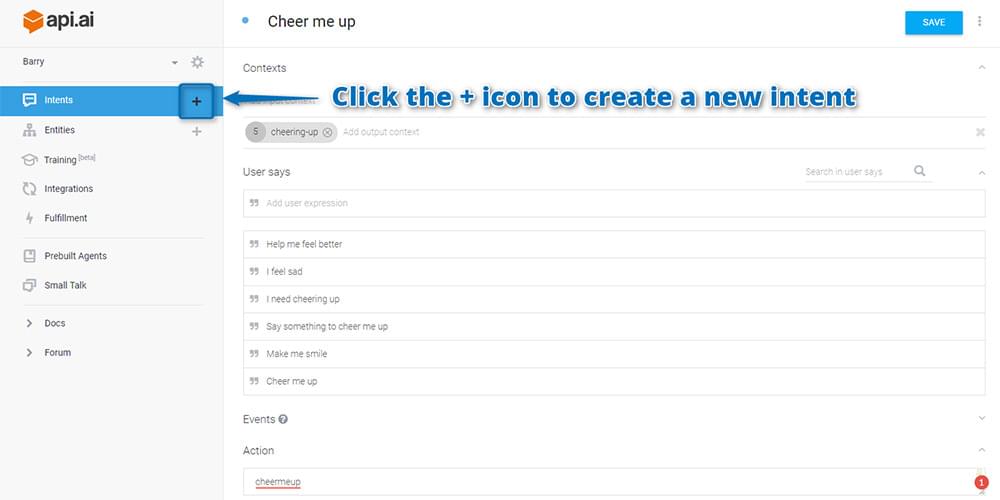

Your agent now understands that there’s a conversation context of “cheering-up”. You can now set up an intent to run only if that context is active. As an example, create an intent for one possible answer to your agent’s question: “A movie quote”. Go back to the menu on the left and click the plus icon to create a new intent:

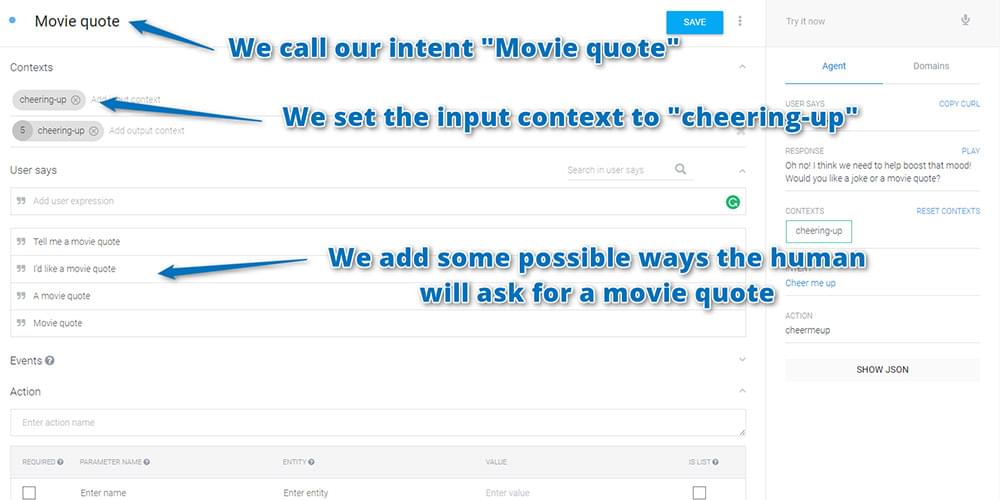

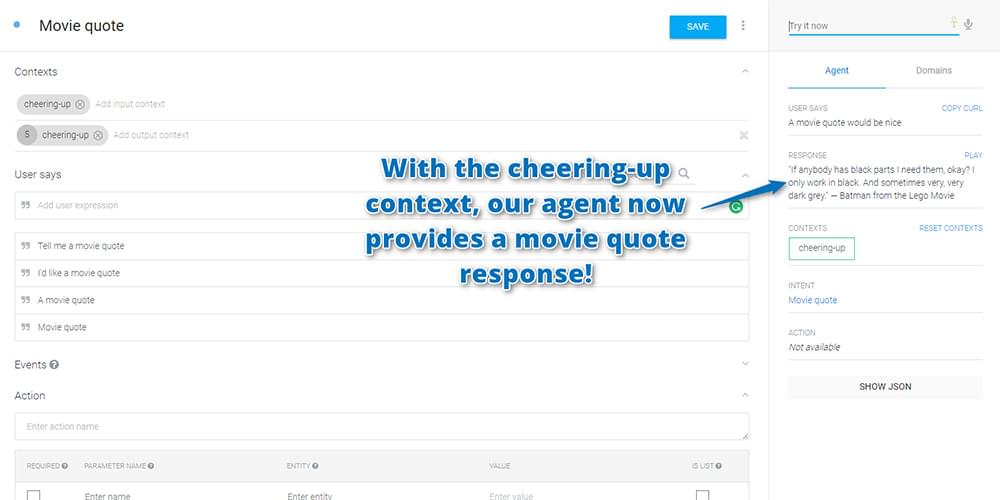

Call your intent “Movie quote” and set the input context to “cheering-up”. This tells your agent to consider this intent only if the user has previously asked to be cheered up. Then add a few sample ways the user might say “I’d like a movie quote”:

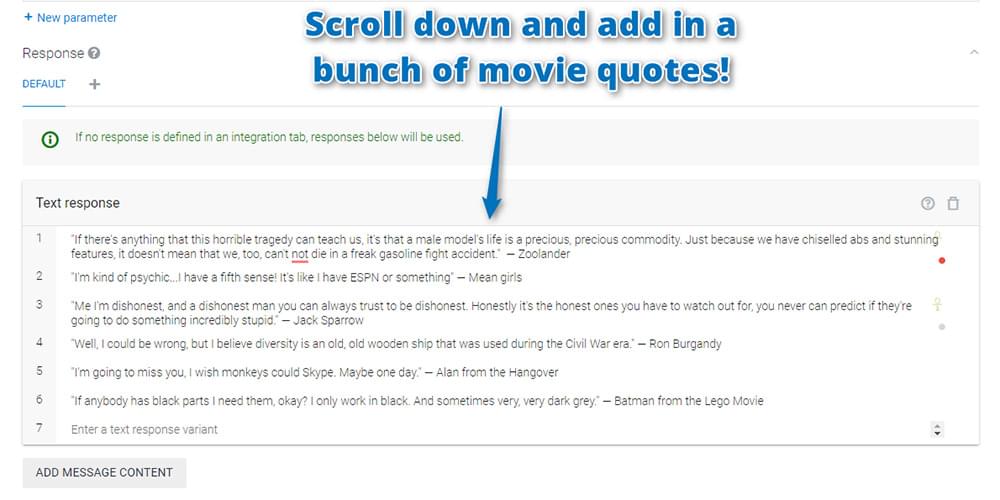

Then scroll down to the response section and include a range of movie quotes (feel free to include your own favorites):

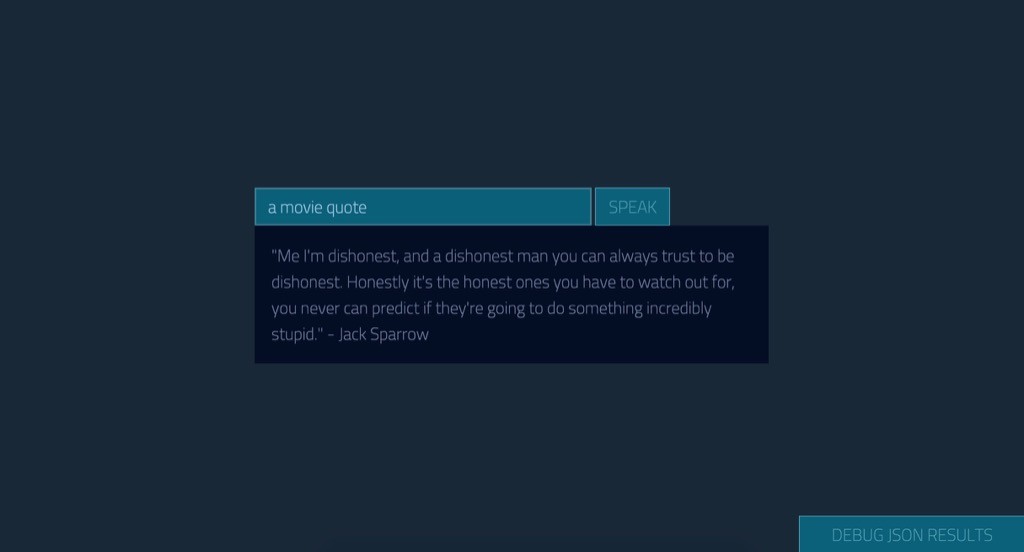

Click “Save” next to your intent’s name once again to create your movie quote intent. Then in the test console beside it, try entering “Cheer me up” and follow it with “Movie quote”. The agent should now tell you a movie quote!

You could then follow the same process to add an intent for “A joke” too.
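The small simulation below shows why the input context matters in the two-turn exchange above. It is a sketch under simplifying assumptions. Intents match only on exact, case-insensitive phrases, and contexts never expire. The `Intent` class and `reply` function are hypothetical and not part of Api.ai. The key step is the check that skips any intent whose input contexts aren’t all active.

```python
from __future__ import annotations

import random
from dataclasses import dataclass, field


@dataclass
class Intent:
    """Hypothetical intent with example phrases, replies and context names."""

    name: str
    user_says: list[str]
    responses: list[str]
    input_contexts: list[str] = field(default_factory=list)
    output_contexts: list[str] = field(default_factory=list)


INTENTS = [
    Intent("Cheer me up", ["cheer me up", "make me smile", "i feel sad"],
           ["Let's cheer you up! Would you like a joke or a movie quote?"],
           output_contexts=["cheering-up"]),
    Intent("Movie quote", ["movie quote", "a movie quote", "i'd like a movie quote"],
           ["Just keep swimming.", "To infinity and beyond!"],
           input_contexts=["cheering-up"]),
]


def reply(text: str, active: set[str]) -> str:
    """Answer one message, adding any output contexts to `active` (the conversation state)."""
    phrase = text.lower().strip(" .!?")
    for intent in INTENTS:
        # Only consider intents whose input contexts are all currently active.
        if not set(intent.input_contexts) <= active:
            continue
        if phrase in intent.user_says:
            active.update(intent.output_contexts)
            return random.choice(intent.responses)
    return "Sorry, I didn't get that."


conversation: set[str] = set()
print(reply("Movie quote", conversation))  # Sorry, I didn't get that.
print(reply("Cheer me up", conversation))  # Let's cheer you up! Would you like a joke or a movie quote?
print(reply("Movie quote", conversation))  # one of the two quotes, chosen at random
```

Asking for a movie quote before being cheered up falls through to the fallback reply. The “Movie quote” intent requires the “cheering-up” context, and only “Cheer me up” sets it.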

You also aren’t limited to giving your agent a list of hard-coded responses. You could instead set an action name for each intent and respond to that action within your web app. This is another concept I’ll be covering in a future article! You can prepare for it by giving your “Movie quote” intent an action called “cheermeup.moviequote”. The “cheermeup.” prefix keeps the action from getting mixed up with any generic “moviequote” action you add in future.
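Here is a sketch of how a web app might respond to those action names, written in Python for illustration. `HANDLERS`, `respond` and `MY_QUOTES` are hypothetical names. The plain “moviequote” handler stands in for a possible future generic action. It shows that the namespaced “cheermeup.moviequote” can live alongside it without clashing.

```python
from __future__ import annotations

import random
from typing import Callable

# Hypothetical quotes kept in the web app instead of in the agent's responses.
MY_QUOTES = ["Just keep swimming.", "To infinity and beyond!"]

# Hypothetical handlers keyed by the action names set in the Api.ai console.
HANDLERS: dict[str, Callable[[str], str]] = {
    "cheermeup.moviequote": lambda speech: random.choice(MY_QUOTES),
    # A possible future generic action; the "cheermeup." prefix keeps the two apart.
    "moviequote": lambda speech: "Which movie would you like a quote from?",
}


def respond(action: str, speech: str) -> str:
    """Use the web app's handler for a known action, else show the agent's own speech."""
    handler = HANDLERS.get(action)
    return handler(speech) if handler else speech


print(respond("cheermeup.moviequote", ""))  # one of MY_QUOTES
print(respond("cheermeup", "Would you like a joke or a movie quote?"))  # the agent's speech
```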

In Action

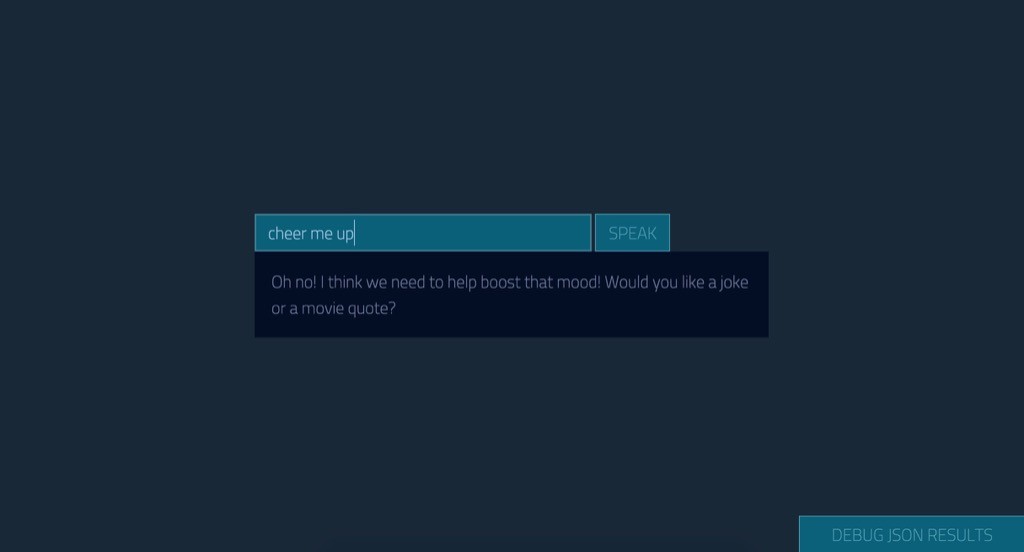

If you added these intents to the same personal assistant used for your web app in the previous article, the new functionality should appear automatically! If you created a new agent, you’ll need to update the API keys in your web app first. Open your personal assistant web app in your browser and try it out by asking your assistant to cheer you up.

Then tell it you’d like a movie quote and see what happens.

Conclusion

There are plenty of ways to use the concepts of intents and contexts to personalize your assistant. Chances are you already have a few ideas in mind! There’s still more we can do to train up our Api.ai assistant by teaching it to recognize concepts (known as entities) within your custom intents, which we cover in the next article in this series!

If you’re following along and building your own personal assistant using Api.ai, I’d love to hear about it! What custom intents have you come up with? Let me know in the comments below, or get in touch with me on Twitter at @thatpatrickguy.

Frequently Asked Questions (FAQs) about Customizing Your API.AI Assistant with Intent and Context

How can I create my own AI assistant using API.AI?

Creating your own AI assistant using API.AI involves several steps. First, sign up for an account on the API.AI website. Once you have an account, you can create a new agent, which is essentially your AI assistant. You can then customize your agent by defining intents and contexts. Intents map what a user says to an action and a response, while contexts control when certain intents can be triggered. You can also add entities, which are specific pieces of information that your agent can recognize and handle.

What are the differences between API.AI and other AI platforms?

API.AI, now known as Dialogflow, is a platform that allows developers to build conversational interfaces for websites, mobile applications, popular messaging platforms, and IoT devices. It was originally built by the startup Speaktoit and acquired by Google in 2016. Its main strengths compared with other platforms are its ease of use, its many built-in integrations, and machine learning that generalizes from a small set of example phrases. API.AI supports both voice and text-based conversations, and it offers a range of pre-built agents for common use cases.

How can I use Python to build an AI assistant?

Python is a popular language for building AI assistants due to its simplicity and the availability of numerous libraries for machine learning and natural language processing. With API.AI, you would typically define your intents and contexts in the API.AI console. Your Python code then uses API.AI’s Python SDK (or plain HTTP requests) to send user input to API.AI and receive the matched intent, action, and response.
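To illustrate the plain-HTTP route, the following sketch builds, but doesn’t send, a query request using only Python’s standard library. Several details are assumptions based on Api.ai’s v1 REST API: the endpoint, the `v=20150910` version parameter, and the `query`/`lang`/`sessionId` body fields. The service is now Dialogflow, whose current API differs, so treat this as illustrative. As far as I understand it, contexts are tracked per session, so reusing one session ID per conversation is what lets a context such as “cheering-up” carry over between requests.

```python
import json
import urllib.request
import uuid

# Assumed Api.ai v1 endpoint and version parameter; the token is a placeholder.
QUERY_URL = "https://api.api.ai/v1/query?v=20150910"
CLIENT_ACCESS_TOKEN = "YOUR_CLIENT_ACCESS_TOKEN"


def build_query(text: str, session_id: str) -> urllib.request.Request:
    """Build (but don't send) a POST request asking the agent to interpret `text`."""
    body = {"query": text, "lang": "en", "sessionId": session_id}
    return urllib.request.Request(
        QUERY_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {CLIENT_ACCESS_TOKEN}",
            "Content-Type": "application/json; charset=utf-8",
        },
        method="POST",
    )


# One session ID per conversation, so follow-up messages share its contexts.
session_id = str(uuid.uuid4())
first = build_query("Cheer me up", session_id)
second = build_query("Movie quote", session_id)
# To actually send one: json.load(urllib.request.urlopen(first))
```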

What are the benefits of customizing my AI assistant with intent and context?

Customizing your AI assistant with intent and context allows you to create a more natural and interactive user experience. By defining intents, you can control what actions your assistant can perform, and by using contexts, you can control when those actions are triggered. This allows your assistant to handle complex conversations and understand user input in a variety of formats and situations.

How can I add entities to my AI assistant?

Entities in API.AI are used to extract parameter values from user input. You can add entities to your AI assistant by defining them in the API.AI console or in your code. Once an entity is defined, you can use it in your intents to capture specific pieces of information from the user’s input. For example, if you have an intent that books flights, you might define entities for cities, dates, and times.
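In API.AI itself, you define an entity’s reference values and synonyms in the console, and the agent does the extraction for you. As a toy illustration of the result, this sketch finds which reference values of a hypothetical “city” entity appear in a sentence, by matching their synonyms as whole words. `CITY` and `extract` are made-up names, and real entity matching is considerably more flexible.

```python
from __future__ import annotations

import re

# Hypothetical "city" entity: each reference value lists synonyms users might type.
CITY = {
    "New York": ["new york", "nyc", "the big apple"],
    "Sydney": ["sydney", "syd"],
}


def extract(text: str, entity: dict[str, list[str]]) -> list[str]:
    """Return the reference values whose synonyms appear in the text, in text order."""
    lowered = text.lower()
    found = []
    for value, synonyms in entity.items():
        for synonym in synonyms:
            hit = re.search(rf"\b{re.escape(synonym)}\b", lowered)
            if hit:
                found.append((hit.start(), value))
                break  # one synonym is enough for this reference value
    return [value for _, value in sorted(found)]


print(extract("Book a flight from Sydney to NYC", CITY))  # ['Sydney', 'New York']
```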

Can I integrate my AI assistant with other services?

Yes, API.AI provides integration options for a wide range of services, including popular messaging platforms like Facebook Messenger and Slack, as well as voice assistants like Google Assistant and Amazon Alexa. This allows you to make your AI assistant available to users on their preferred platforms.

How can I test my AI assistant?

API.AI provides a built-in testing console that you can use to interact with your AI assistant and see how it responds to different inputs. You can also use the API.AI SDKs to write test scripts in your preferred programming language.

What are some common use cases for AI assistants?

AI assistants can be used in a wide range of applications, from customer service and support, to personal productivity, to home automation. For example, an AI assistant could help users book flights, answer questions about a product, or control smart home devices.

How does machine learning work in API.AI?

API.AI uses machine learning to understand user input and determine the best response. When you define an intent, you provide examples of user input that should trigger that intent. API.AI uses these examples to train a machine learning model, which it then uses to match future user input to the appropriate intent.

Can I use API.AI to build a voice assistant?

Yes, API.AI supports both text and voice input, making it a great choice for building voice assistants. You can integrate your API.AI agent with voice platforms like Google Assistant and Amazon Alexa, or you can use the API.AI SDKs to add voice capabilities to your own applications.

Patrick Catanzariti

PatCat is the founder of Dev Diner, a site that explores developing for emerging tech such as virtual and augmented reality, the Internet of Things, artificial intelligence and wearables. He is a SitePoint contributing editor for emerging tech, an instructor at SitePoint Premium and O'Reilly, a Meta Pioneer and freelance developer who loves every opportunity to tinker with something new in a tech demo.